Yeah, this is probably not overly surprising, but it still serves as a useful reminder of the limitations of the current wave of generative AI search tools, which social apps are now pushing you to use at every turn.

According to a new study conducted by the Tow Center for Digital Journalism, most of the major AI search engines fail to provide correct citations of news articles within queries, with the tools often making up reference links, or simply not providing an answer when questioned on a source.

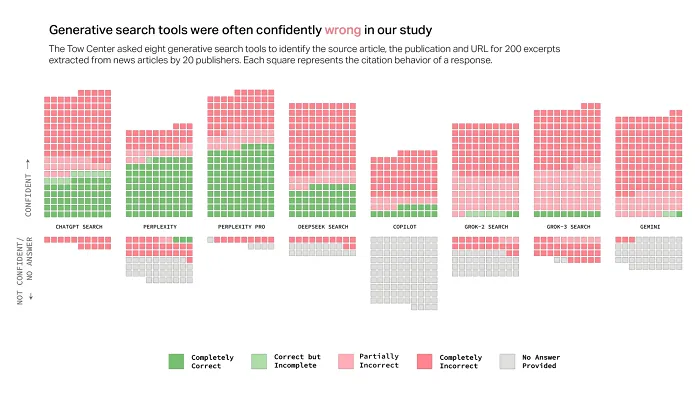

As you can see in this chart, most of the major AI chatbots weren’t particularly good at providing relevant citations, with xAI’s Grok chatbot, which Elon Musk has touted as the “most truthful” AI, being among the most inaccurate or unreliable resources in this respect.

As per the report:

“Overall, the chatbots provided incorrect answers to more than 60% of queries. Across different platforms, the level of inaccuracy varied, with Perplexity answering 37% of the queries incorrectly, while Grok 3 had a much higher error rate, answering 94% of the queries incorrectly.”

On another front, the report found that, in many cases, these tools were able to provide information from sources that have been locked down to AI scraping:

“On some occasions, the chatbots either incorrectly answered or declined to answer queries from publishers that permitted them to access their content. On the other hand, they sometimes correctly answered queries about publishers whose content they shouldn’t have had access to.”

Which suggests that some AI providers are not respecting the robots.txt directives that block them from accessing copyright protected works.
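For context, robots.txt is a voluntary signal: a well-behaved crawler is expected to check it before fetching a page, but nothing technically enforces that. Below is a minimal sketch of what that check looks like, using Python's standard `urllib.robotparser`. The bot name `ExampleAIBot`, the robots.txt rules, and the article URL are all made up for illustration, and are not taken from the report or from any real publisher.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that blocks one AI crawler and allows everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""

# Hypothetical article URL on a placeholder domain.
ARTICLE_URL = "https://news.example.com/2025/03/some-article"


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("ExampleAIBot", "SomeBrowser"):
        verdict = "allowed" if is_allowed(ROBOTS_TXT, agent, ARTICLE_URL) else "blocked"
        print(f"{agent}: {verdict}")
```

A crawler that skips this check, or ignores the result, can still read the page, which is consistent with the behavior the report describes.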

But the topline concern relates to the reliability of AI tools, which are increasingly being used as search engines by a growing number of web users. Indeed, many youngsters are now growing up with ChatGPT as their research tool of choice, and insights like this show that you cannot rely on AI tools to give you accurate information, or to educate you on key topics in any reliable way.

Of course, that’s not news, as such. Anybody who’s used an AI chatbot will know that the responses are not always valuable, or usable in any way. But again, the concern is more that we’re promoting these tools as a replacement for actual research, and a shortcut to knowledge, and for younger users in particular, that could lead to a new age of ill-informed, less equipped people, who outsource their own logic to these systems.

Businessman Mark Cuban summed this problem up pretty accurately in a session at SXSW this week:

“AI isn’t the answer. AI is the tool. Whatever skills you have, you can use AI to amplify them.”

Cuban’s point is that while AI tools can give you an edge, and everyone should be considering how they can use them to enhance their performance, they are not solutions in themselves.

AI can create video for you, but it can’t come up with a story, which is the most compelling element. AI can produce code that’ll help you build an app, but it can’t build the actual app itself.

This is where you need your own critical thinking skills and abilities to develop these elements into something bigger, and while AI outputs will definitely help in this respect, they are not an answer in themselves.

The concern in this particular case is that we’re showing youngsters that AI tools can give them answers, which the research has repeatedly shown they’re not particularly good at.

What we need is for people to understand how these systems can extend their abilities, not replace them, and that to get the most out of these systems, you first need to have key research and analytical skills, as well as expertise in related fields.