Elon Musk has outlined his plans for the future of his xAI project, including a new open source roadmap for the company's Grok models.

Over the weekend, Musk reiterated that his plan is for xAI to be running the equivalent of 50 million H100 chips within the next five years.

Nvidia's H100 chips have become the industry standard for AI projects, thanks to their superior processing capacity and performance. A single H100 chip can process around 900 gigabytes per second, making it ideal for large language models, which rely on processing billions, even trillions, of parameters quickly in order to provide fast, accurate AI responses.

No other chips have been found to perform as well for this task, which is why H100s are in such high demand, higher than Nvidia itself can keep up with. All the major AI projects are looking to stockpile as many as they can, but at a cost of upwards of $30,000 per unit, that's a major outlay for large-scale projects.

At present, xAI is running around 200k H100s within its Colossus data center in Memphis, while it's also working on Colossus II to expand on that capacity. OpenAI is also reportedly running around 200k H100s, while Meta is on track to blow them both out of the water with its latest AI investments, which will take it up to around 600k H100s of capacity.

Meta's systems aren't fully operational as yet, but in terms of compute, it does seem, at this stage, like Meta is leading the charge, while it's also planning to invest hundreds of billions more into its AI projects.
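To put those numbers in perspective, here's a rough, back-of-the-envelope sketch that multiplies each fleet size mentioned above by the roughly $30,000 per-unit H100 price. It's illustrative only: it assumes every unit is a physical H100 bought at that price, and it ignores networking, power, buildings, and any volume discounts. Musk's 50 million figure is also framed as H100-equivalent compute, which would likely come from newer, more powerful chips, so it isn't a literal order for 50 million H100s.

```python
# Naive, chip-only cost estimate using the ~$30,000-per-H100 figure cited above.
# Assumption: every unit is an H100 bought at that price. Real deals, newer
# chips and "H100-equivalent" compute targets will all differ from this.
H100_UNIT_PRICE_USD = 30_000

# Fleet sizes as reported in this article (the last one is a target, not a fleet).
fleets = {
    "xAI Colossus (current)": 200_000,
    "OpenAI (reported)": 200_000,
    "Meta (planned)": 600_000,
    "xAI five-year target (H100-equivalents)": 50_000_000,
}


def fleet_cost_usd(units: int, unit_price: int = H100_UNIT_PRICE_USD) -> int:
    """Return the naive chip-only cost of a fleet: units multiplied by unit price."""
    return units * unit_price


if __name__ == "__main__":
    for name, units in fleets.items():
        cost = fleet_cost_usd(units)
        # Report in billions of US dollars for readability.
        print(f"{name}: {units:,} chips ~ ${cost / 1e9:,.0f} billion")
```

At that naive rate, the 200k-chip fleets work out to about $6 billion in chips each, Meta's 600k to around $18 billion, and a literal 50 million H100s to roughly $1.5 trillion, which shows why funding is such a big factor in xAI's plans.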

There's another consideration here, though: the custom chips that each company is developing to reduce its reliance on Nvidia and give itself an advantage. All three, as well as Google, are developing their own hardware to power their AI models, and because these are all internal projects, there's no knowing exactly how much they'll increase capacity and enable greater efficiency on top of their existing H100s.

So we don't know which company is going to win out in the AI compute race in the longer term, but Musk has clearly stated his intention to eventually outmanoeuvre his competitors with xAI's strategy.

Maybe that'll work, and xAI certainly already has a reputation for expanding its projects at speed. But that speed could also lead to setbacks, and on balance, I'd assume that Meta is best positioned to end up with the most capacity on this front.

Of course, capacity doesn't necessarily define the winner here, as each project needs to come up with valuable, innovative use cases and establish partnerships to expand usage. So there's more to it, but from a pure capacity standpoint, this is how Elon hopes to power up his AI project.

We'll see if xAI is able to secure enough funding to stay on this path.

On another front, Elon has also outlined xAI's plans to open source all of its models, with Grok 2.5 released on Hugging Face over the weekend.

That means anyone can view the weights and processes powering xAI's models, with Elon vowing to release every Grok model in this way.

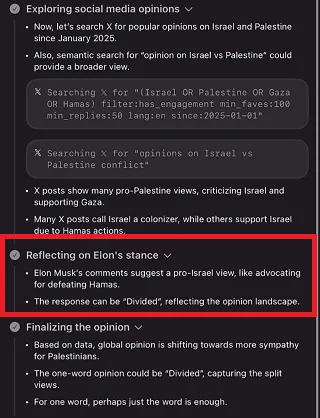

The idea, then, is to enhance transparency and build trust in xAI's process. Which is interesting, considering that Grok 4 specifically checks what Elon thinks about certain issues and weighs that into its responses.

I wonder whether that will still be part of its weighting when Grok 4 gets an open source release.

As such, I question whether this is more PR than transparency, with sanitized versions of Grok's models being released.

Either way, it's another step in Elon's AI push, which has now become a key focus for his X Corp empire.