I know that this is a controversial idea, for a lot of reasons, and that it’s probably never going to gain traction, and maybe it shouldn’t. But maybe there are benefits to implementing more stringent controls over social media algorithms, and limits on what can and can’t be boosted by them, in order to address the constant amplification of rage-baiting, which is clearly causing major divides in Western society.

The U.S., of course, is the prime example of this, with extremist social media personalities now driving major divides in society. Such commentators are effectively incentivized by algorithmic distribution: social media algorithms aim to drive more engagement, in order to keep people using their respective apps more often, and the biggest drivers of engagement are posts that spark a strong emotional response.

Various studies have shown that the emotions that drive the strongest response, particularly in terms of social media comments, are anger, fear and joy. Of the three, anger has the most viral potential.

“Anger is more contagious than joy, indicating that it can drive more angry follow-up tweets, and anger prefers weaker ties than joy for the dissemination in social network, indicating that it can penetrate different communities and break local traps by more sharing between strangers.”

So anger is more likely to spread to other communities, which is why algorithmic incentive is such a major concern in this respect. Algorithmic equations, which aren’t able to evaluate human emotion, will amplify whatever’s driving the biggest response, and show that to more users. The system doesn’t know what it’s boosting; all it’s assessing is response, with the binary logic being that if a lot of people are talking about this subject/issue/post, then maybe more people will be interested in seeing the same, and adding their own thoughts as well.
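To make that mechanism concrete, here’s a minimal sketch of an engagement-only ranker. It is not any platform’s actual system: the `Post` fields and the weights are hypothetical assumptions, chosen only to show that the score counts reactions without ever looking at what those reactions mean.

```python
from dataclasses import dataclass


@dataclass
class Post:
    # Hypothetical post record: only interaction counts, no notion of tone or accuracy.
    post_id: str
    likes: int
    comments: int
    shares: int


# Hypothetical weights: comments and shares are treated as stronger signals than likes.
WEIGHTS = {"likes": 1.0, "comments": 3.0, "shares": 5.0}


def engagement_score(post: Post) -> float:
    """Score a post purely by how much response it generated."""
    return (WEIGHTS["likes"] * post.likes
            + WEIGHTS["comments"] * post.comments
            + WEIGHTS["shares"] * post.shares)


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts by engagement, highest first; the content itself is never inspected."""
    return sorted(posts, key=engagement_score, reverse=True)


if __name__ == "__main__":
    feed = [
        Post("calm_explainer", likes=120, comments=4, shares=2),
        Post("rage_bait", likes=80, comments=60, shares=25),
    ]
    for p in rank_feed(feed):
        print(p.post_id, engagement_score(p))
```

Here the post that provokes more comments and shares wins (385 versus 142), even with fewer likes, and nothing in the scoring asks why people are reacting.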

This is a major concern with the current digital media landscape: algorithmic amplification drives more angst and division, because that’s, inadvertently, what it’s designed to do. And while social platforms are now trying to add a level of human input into this process, with approaches like Community Notes, which append human-curated contextual pointers to such trends, that won’t do anything to counter the mass amplification of divisive content, which, again, creators and publishers are incentivized to create in order to maximize reach and response.

There’s no way to address this within purely performance-driven algorithmic systems. But what if the algorithms were designed to specifically amplify more positive content, and reduce the reach of less socially beneficial material?
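One way to picture that alternative is to multiply a post’s engagement score by a per-topic weight set by whoever controls the system. The sketch below is purely illustrative: the topic labels and multiplier values are hypothetical, and it assumes posts arrive already labelled by topic, which in practice would itself be a contested judgment.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    topic: str         # hypothetical, pre-assigned topic label
    engagement: float  # raw score from an engagement-only ranker


# Hypothetical multipliers: >1 boosts a topic, <1 suppresses it; unlisted topics stay neutral.
TOPIC_WEIGHTS = {"positive_energy": 2.0, "knowledge_sharing": 1.5, "outrage": 0.3}


def weighted_score(post: Post) -> float:
    """Scale raw engagement by a topic weight chosen by the system's operator."""
    return post.engagement * TOPIC_WEIGHTS.get(post.topic, 1.0)


def rank_feed(posts: list[Post]) -> list[Post]:
    """Order posts by topic-weighted engagement, highest first."""
    return sorted(posts, key=weighted_score, reverse=True)


if __name__ == "__main__":
    feed = [
        Post("rage_bait", "outrage", 385.0),
        Post("calm_explainer", "knowledge_sharing", 142.0),
    ]
    for p in rank_feed(feed):
        print(p.post_id, weighted_score(p))
```

The ranking flips, but the outcome now hinges entirely on who fills in `TOPIC_WEIGHTS`, which is the same question of trust that any such intervention raises.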

That’s already happening in China, with the Chinese government implementing a level of control over the algorithms in popular local apps, in order to ensure that more positive content is amplified to more people.

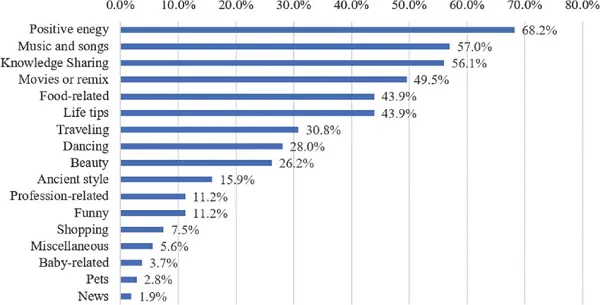

As you can see in this listing of the topics that see the highest rate of promotion on Douyin, the Chinese local version of TikTok, among the most popular topics are ‘positive energy’ and ‘knowledge sharing,’ two topics that would be unlikely to get anywhere near these levels of comparative engagement on TikTok.

That’s because the Chinese government seeks to manage what young people are exposed to in social apps, with the idea being that promoting more positive trends will encourage young people to aspire to more beneficial, socially valuable goals.

Contrast that with TikTok, where prank videos and, increasingly, content promoting political discourse are the norm. And with more and more people, especially younger audiences, now getting their news from TikTok, the divisive nature of algorithmic amplification is already impacting kids, and their views on how the world works. Or, more to the point, how it doesn’t work under the current system.

Some have even suggested that this is the main aim of TikTok: that the Chinese government is seeking to use TikTok to further destabilize Western society by seeding anti-social behaviors via TikTok clips. That’s a little too conspiratorial for me, but it’s worth noting the comparative division that such amplification inspires, versus promoting more beneficial content.

To be clear, I’m not arguing that Western governments should be controlling social media algorithms in the way that the CCP influences the trends in popular social apps in China. But there is something to be said for chaos versus cohesion, and for how social platform algorithms contribute to the confusion that drives such division, particularly among younger audiences.

So what’s the answer? Should the U.S. government look to, say, take ownership of Instagram and assert its own influence over what people see?

Well, given that U.S. President Donald Trump remarked last week that he would make TikTok’s algorithm “100% MAGA” if he could, that’s probably less than ideal. But there does seem to be a case for more control over what trends in social apps, and for weighting certain, more positive movements more heavily, in order to increase understanding, as opposed to undermining it.

The problem is there’s no arbiter that anyone can trust to do this. Again, if you entrust the sitting government of the day with this control, it’s likely to angle these trends to its own benefit, while such a system would also require different approaches in each region, which would be increasingly difficult to manage and trust.

You could look to let broad governing bodies, like the European Union, manage this on a broader scale, though EU regulators have already caused significant disruption through their evolving big tech regulations, for questionable benefit. Would they be any better at managing positive and negative trends in social apps?

And naturally, all of that is implementing a stage of bias, which many individuals have been opposing for years. Elon Musk ostensibly bought Twitter for this precise cause, to cease the Liberal bias in social media apps. And whereas he’s since tilted the stability the opposite means, the Twitter/X instance is a transparent demonstration of how personal possession can’t be trusted to get this proper, someway.

So how do you fix it? Clearly, there’s a level of division within Western society which is leading to major negative repercussions, and a lot of that is being driven by the ongoing demonization of groups of people online.

As an example of this in practice, I’m sure that everybody knows at least one person who posts about their dislike for minority groups online, yet that same person probably also knows people in their real life who are part of those same groups that they vilify, and they have no problem with them at all.

That disconnect is the issue: who people are in real life is not who they portray online, and broad generalization of this kind is not indicative of the actual human experience. Yet algorithmic incentives push people to be somebody else, driven by the dopamine hits that they get from likes and comments, inflaming sore spots of division for the benefit of the platforms themselves.

Maybe, then, algorithms should be eliminated entirely. That could be a partial solution, though the same emotional incentives also drive sharing behaviors. So while removing algorithmic amplification could reduce the power of engagement-driven systems, people would still be incentivized, to a lesser degree, to boost more emotionally charged narratives and perspectives.

You’d still, for example, see people sharing clips from Alex Jones in which he deliberately says controversial things. But maybe removing the algorithmic boosting of such content would still make a difference.

People these days are also much more technically savvy overall, and would be able to navigate online spaces without algorithmic aids. But then again, platforms like TikTok, which are entirely driven by signals defined by your viewing habits, have changed the paradigm for how social platforms work, with users now much more attuned to letting the system show them what they want to see, as opposed to having to seek it out for themselves.

Removing algorithms would also see the platforms suffer major drops in engagement, and thus in ad revenue. That could be a bigger concern in terms of restricting commerce, and social platforms now also have their own armies of lobbyists in Washington to oppose any such proposal.

So if social platforms, or some higher arbiter, can’t intervene and downgrade certain topics while boosting others, in the mold of the CCP approach, there doesn’t seem to be an answer. That means the heightened emotional response to every issue that comes up is set to keep driving social discourse into the future.

That probably means that the current state of division is the norm, because a general public that increasingly relies on social media to stay informed is also going to keep being manipulated by it, based on the foundational algorithmic incentives.

Without some level of intervention, there’s no way around this, and given the opposition to even the suggestion of such interference, as well as the lack of answers on how it might be applied, you can expect to keep being angered by the latest news.

Because the emotion that you feel when you read each headline and hot take is the whole point.